Run New and Failing Tests on File Change with Pytest

When refactoring code or doing test-driven development, a test runner that watches file changes and runs only failed tests can save a pile of time. (Like most people, I measure time in piles of hourglass sand.)

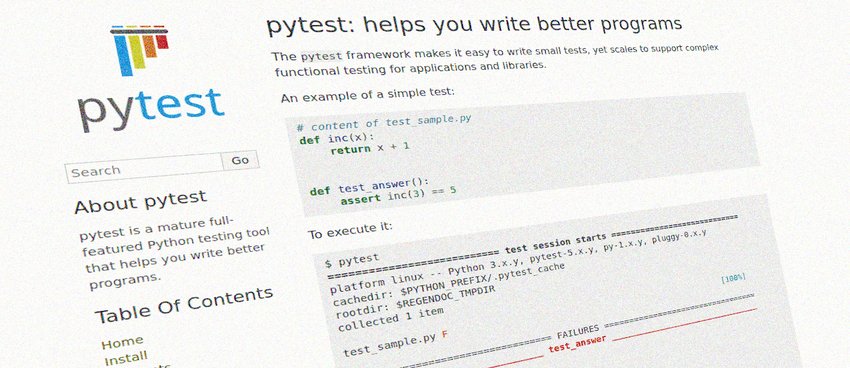

Say you're working on a Python project that has 200 unit tests and uses pytest as its test runner.

To add tests for a new feature, you might:

1. Run the existing test suite to ensure that all 200 tests pass

2. Watch the test output as though the act of observing increases the likelihood of the tests passing

3. Write code for the new feature or bugfix

4. Write unit tests for the new code (say, 5 tests)

5. Run the test suite to ensure that all 205 tests pass

6. Watch the test output in real time again

7. Repeat steps 3-6 for each feature or bugfix, increasing the number of tests accordingly

That workflow executes 405 tests in order to add 5, and that's if the tests all pass on the first try. Every failure that sends you back for another run adds 205 more test executions.
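The arithmetic above can be checked with a short Python sketch. The function name and the `failed_runs` parameter are illustrative, and the model assumes that each failure costs exactly one more run of the full, grown suite.

```python
def full_suite_executions(existing: int, new: int, failed_runs: int = 0) -> int:
    """Count test executions when every run executes the whole suite.

    existing:    tests in the suite before the change
    new:         tests added for the feature or bugfix
    failed_runs: extra full runs triggered by failures (assumption:
                 one rerun of the grown suite per failing round)
    """
    grown = existing + new
    # Baseline run + run after adding the new tests + one rerun per failure.
    return existing + grown + failed_runs * grown


print(full_suite_executions(200, 5))                 # 405
print(full_suite_executions(200, 5, failed_runs=1))  # 610
```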

One way to limit the number of test executions is to run only the new tests. If the 200 existing tests passed, and the 5 new tests pass, then we've got 205 passing tests, right?

Perhaps. The risk in that assumption is that code changes to accommodate the new feature may break existing tests. You may create a merge request only to have the feature branch fail the unit test CI stage, prompting a frantic force-push of an updated branch in an attempt to erase all evidence of your failure and bury your shame.

So, how can we run a Python unit test suite as we're making code changes without running every test every time?

Watch and learn.

Watch

To save programmer paws superfluous keypresses and to preserve CPU cycles, many test runners support a 'watch' mode, which suspends the test runner after it executes the test suite and runs the suite again when a file changes.
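To make the idea concrete, here is a minimal polling watcher in Python. It is a sketch, not pytest-watch's implementation: it compares file modification times once per interval, and the names `snapshot` and `watch`, the `.py`-only filter, and the one-second default are illustrative choices.

```python
import os
import subprocess
import sys
import time


def snapshot(roots, exts=(".py",)):
    """Map each matching file under `roots` to its last-modified time."""
    mtimes = {}
    for root in roots:
        for dirpath, dirnames, filenames in os.walk(root):
            # Skip hidden directories such as .git or .pytest_cache.
            dirnames[:] = [d for d in dirnames if not d.startswith(".")]
            for name in filenames:
                if name.endswith(exts):
                    path = os.path.join(dirpath, name)
                    mtimes[path] = os.path.getmtime(path)
    return mtimes


def watch(roots=(".",), pytest_args=(), interval=1.0):
    """Run pytest once, then rerun it whenever a watched file changes."""
    command = [sys.executable, "-m", "pytest", *pytest_args]
    subprocess.run(command)
    previous = snapshot(roots)
    while True:
        time.sleep(interval)
        current = snapshot(roots)
        if current != previous:  # a file was added, removed, or modified
            previous = current
            subprocess.run(command)
```

Calling `watch(pytest_args=["--last-failed"])` from a script would run pytest once and then rerun it on every change, until you interrupt it with Ctrl+C.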

For example, Jest, a JavaScript test runner maintained by Facebook, ships with this capability and gives users control over which tests to run:

Watch Usage

› Press a to run all tests.

› Press f to run only failed tests.

› Press o to only run tests related to changed files.

› Press q to quit watch mode.

› Press t to filter by a test name regex pattern.

› Press p to filter by a filename regex pattern.

› Press Enter to trigger a test run.

For pytest, pytest-watch is a popular wrapper that "runs pytest, and re-runs it when a file in your project changes", which gives pytest those same 'watch' powers.

To run only failed tests using pytest-watch, we'll have to look elsewhere.

Learn

Thankfully we won't have to look very far.

Like Jest, pytest itself can run only failed tests, using the --last-failed (or --lf) flag. With this flag, pytest will learn from its past experience and:

rerun only the tests that failed at the last run (or all if none failed). (from pytest -h)

To use this with pytest-watch, put it after the -- separator, which marks where pytest-watch's arguments stop and pytest's arguments start:

ptw -- --last-failed
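The `--` convention is easy to model. The hypothetical helper below splits a command line the way the paragraph above describes: everything before the first `--` is for the watcher, everything after it is passed through to pytest. It illustrates the convention only; it is not pytest-watch's argument parser.

```python
def split_at_separator(argv):
    """Split argv at the first '--' into (watcher_args, pytest_args)."""
    if "--" in argv:
        index = argv.index("--")
        return argv[:index], argv[index + 1:]
    # No separator: everything belongs to the watcher.
    return argv, []


watcher_args, pytest_args = split_at_separator(["--", "--last-failed"])
print(watcher_args, pytest_args)  # [] ['--last-failed']
```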

Now we can run only failed tests on file changes, but what if we add new tests?

Solution

With --last-failed, pytest uses its cache plugin to run only the tests that it knows failed during the last run. If some tests failed last time, however, any tests you've added since, or passing tests you've changed so that they now fail, won't run: they aren't recorded as failures in the cache from the previous run.
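In current pytest versions, that record lives in `.pytest_cache/v/cache/lastfailed` as a JSON object keyed by test node ID, and `pytest --cache-show` prints the cache contents. The sketch below reads that file and models the selection rule from the help text. `select_last_failed` is a simplification for illustration, not pytest's implementation; the real plugin does more, such as skipping files that have no recorded failures.

```python
import json
from pathlib import Path


def read_last_failed(cache_dir=".pytest_cache"):
    """Return the node IDs that pytest recorded as failing in its last run."""
    path = Path(cache_dir) / "v" / "cache" / "lastfailed"
    if not path.exists():
        return set()
    # The file maps node IDs to true, e.g. {"tests/test_things.py::test_x": true}.
    return set(json.loads(path.read_text()))


def select_last_failed(collected, last_failed):
    """Rerun only recorded failures, or everything if none failed."""
    rerun = [nodeid for nodeid in collected if nodeid in last_failed]
    return rerun or list(collected)


collected = [
    "tests/test_things.py::test_universe_is_broken",
    "tests/test_things.py::test_brand_new",  # added since the last run
]
last_failed = {"tests/test_things.py::test_universe_is_broken"}
print(select_last_failed(collected, last_failed))
# ['tests/test_things.py::test_universe_is_broken']: the new test is left out
```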

So, is there a way to run failed tests and changed tests?

Yes, pytest supports this, too, when you combine the --last-failed and --new-first flags. With the --new-first flag, pytest will:

run tests from new files first, then the rest of the tests, sorted by file mtime. (from pytest -h)

When running new-first with the last-failed flag, "the rest of the tests" refers to the failing tests instead of the entire test suite.
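A toy model of the combined selection described above might look like the following. It is a sketch of this section's description rather than pytest's code, and it makes two assumptions: a test counts as "new" when its node ID is missing from the set of tests seen in earlier runs, and files are ordered most recently modified first. The `mtime` dictionary stands in for real file timestamps.

```python
def select_lf_nf(collected, seen, last_failed, mtime):
    """Model of --last-failed --new-first: new tests first, then failures.

    collected:   node IDs found in this run
    seen:        node IDs recorded in earlier runs
    last_failed: node IDs that failed in the previous run
    mtime:       file path -> modification time (stand-in for the filesystem)
    """
    seen = set(seen)
    if last_failed:
        # Keep tests that are new or that failed last time.
        chosen = [t for t in collected if t not in seen or t in last_failed]
    else:
        chosen = list(collected)  # nothing failed: run everything

    def newest_first(tests):
        # Sort by the modification time of each test's file, newest first.
        return sorted(tests, key=lambda t: mtime[t.split("::")[0]], reverse=True)

    new = [t for t in chosen if t not in seen]
    old = [t for t in chosen if t in seen]
    return newest_first(new) + newest_first(old)


collected = [
    "tests/test_a.py::test_old_pass",
    "tests/test_a.py::test_old_fail",
    "tests/test_b.py::test_brand_new",
]
seen = ["tests/test_a.py::test_old_pass", "tests/test_a.py::test_old_fail"]
mtime = {"tests/test_a.py": 100.0, "tests/test_b.py": 200.0}
print(select_lf_nf(collected, seen, {"tests/test_a.py::test_old_fail"}, mtime))
# ['tests/test_b.py::test_brand_new', 'tests/test_a.py::test_old_fail']
```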

Here's what the command looks like:

ptw -- --last-failed --new-first

And here's an example run:

$ ptw -- --last-failed --new-first

[Sat Feb 15 17:58:36 2020] Running: py.test --last-failed --new-first

================== test session starts ===================

platform linux -- Python 3.8.1, pytest-5.3.5, py-1.8.1, pluggy-0.13.1

rootdir: /home/john/Code/pytest-watch-failed

collected 2 items / 1 deselected / 1 selected

run-last-failure: rerun previous 1 failure (skipped 2 files)

tests/test_things.py F [100%]

======================== FAILURES ========================

________________ test_universe_is_broken _________________

def test_universe_is_broken():

> assert True is False

E assert True is False

tests/test_things.py:6: AssertionError

============ 1 failed, 1 deselected in 0.02s =============

Give it a try!

Caveats

Pytest discovers test files in the current directory (or in the rootdir, if configured) and skips any paths excluded with the following options:

--ignore=path ignore path during collection (multi-allowed).

--ignore-glob=path ignore path pattern during collection (multi-allowed).
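A rough model of what those two options do is sketched below. It's a simplification: pytest resolves these paths relative to where it was invoked, while this sketch only compares the relative paths it's given. `is_ignored` is a hypothetical helper, not a pytest API.

```python
from fnmatch import fnmatch
from pathlib import PurePosixPath


def is_ignored(path, ignore=(), ignore_glob=()):
    """Rough model of --ignore and --ignore-glob during collection."""
    p = PurePosixPath(path)
    for ignored in ignore:
        base = PurePosixPath(ignored)
        # --ignore=path skips the path itself and, for a directory, everything in it.
        if p == base or base in p.parents:
            return True
    # --ignore-glob=pattern skips paths matching a shell-style wildcard pattern.
    return any(fnmatch(str(p), pattern) for pattern in ignore_glob)


print(is_ignored("tests/legacy/test_old.py", ignore=["tests/legacy"]))  # True
print(is_ignored("tests/test_slow_db.py", ignore_glob=["*_slow_*.py"]))  # True
```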

If you specify a directory for pytest-watch to monitor, it won't know when code changes happen in other directories unless you specify those directories, too.

So if you want to watch only a specific subdirectory of your test folder, instead of doing this:

ptw tests/fruit/raspberry -- --last-failed --new-first

specify your source directory ('app' in this case) so pytest-watch knows when source files change:

ptw tests/fruit/raspberry app -- --last-failed --new-first

Alternatives

pytest-watch isn't the only game in town, so it's worth taking a quick look at some pytest plugins that offer features similar to the setup above.

pytest-xdist

pytest-xdist is a "distributed testing plugin" for pytest. Perhaps its best-known feature is that it can use multiple CPUs by running tests in parallel, with a separate worker per CPU.

pytest-xdist can also run tests in a subprocess and rerun failing tests when a file changes, much like pytest-watch combined with pytest's --last-failed flag. xdist's --looponfail mode provides this functionality:

run your tests repeatedly in a subprocess. After each run pytest waits until a file in your project changes and then re-runs the previously failing tests. This is repeated until all tests pass after which again a full run is performed. (from the pytest-xdist README)

Since pytest-watch with pytest's --last-failed flag can do the same, the pytest-watch setup above may be simpler unless you need parallelized test runs.
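The loop described in the README quote above can be sketched in a few lines. This models the README's description, not pytest-xdist's code: `run` and `wait_for_change` are hypothetical callables (run a list of tests and return the IDs that failed; block until a file changes), and `max_rounds` exists only so the sketch can stop.

```python
def loop_on_fail(run, wait_for_change, all_tests, max_rounds=None):
    """Rerun failures after each change; once they pass, do a full run.

    run(tests) -> set of failing test IDs
    wait_for_change() -> returns once a watched file has changed
    """
    failing = run(all_tests)
    rounds = 0
    while max_rounds is None or rounds < max_rounds:
        rounds += 1
        wait_for_change()
        if failing:
            failing = run(sorted(failing))
            if not failing:
                # The previous failures pass now: confirm with a full run.
                failing = run(all_tests)
        else:
            failing = run(all_tests)
    return failing
```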

pytest-testmon

pytest-testmon uses code coverage from Coverage.py to run only those tests impacted by recent file changes. So if you have tests for two functions in ./app/fruit/raspberry.py, changing that file will run tests that use those functions and will skip all other tests.

On its own, pytest-testmon selects the tests affected by source changes since the last pytest run; combined with pytest-watch, it makes that selection on every file change. This is a good option for minimizing the number of tests run when refactoring code against an up-to-date test suite, especially if you already calculate code coverage.

When fixing and adding tests, pytest-testmon might be overkill, given that it adds multiple dependencies. It's still good to know about, and it's worth a try to see whether it fits into your workflow.
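The selection idea from the pytest-testmon description above can be illustrated with a file-level sketch: record which source files each test executed, then pick the tests whose recorded files include a changed one. This is a simplification for illustration; pytest-testmon tracks more detail than whole files, and `affected_tests` and the test IDs below are hypothetical.

```python
def affected_tests(coverage_map, changed_files):
    """Return the tests whose recorded coverage includes a changed file.

    coverage_map: test ID -> set of source files the test executed
    """
    changed = set(changed_files)
    return sorted(test for test, files in coverage_map.items() if files & changed)


coverage_map = {
    "tests/test_raspberry.py::test_ripe": {"app/fruit/raspberry.py"},
    "tests/test_raspberry.py::test_jam": {"app/fruit/raspberry.py", "app/kitchen.py"},
    "tests/test_banana.py::test_peel": {"app/fruit/banana.py"},
}
print(affected_tests(coverage_map, ["app/fruit/raspberry.py"]))
# ['tests/test_raspberry.py::test_jam', 'tests/test_raspberry.py::test_ripe']
```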

Wrap-up

Although pytest can't make us learn from our past mistakes, its --last-failed and --new-first flags can tell us what those past mistakes are and whether to count newly created tests among them. Using these built-in pytest features along with pytest-watch provides a powerful and flexible way to select which tests to run and, importantly, which tests to skip.

I couldn't think of a play on words with which to end this article, so I'll settle for a rhyming couplet in iambic pentameter:

With Pytest choose which tests you want to skip.

I wish that I had craft'd a clever quip.